Using Drupal? Here Are the Best Modules for Onsite SEO

Without a doubt, Drupal is one of the most popular content management systems around, with millions of websites using it. Many users face one common issue: choosing Drupal modules that are both easy to use and effective at improving their website’s visibility in search engines.

Although this seems like a difficult and time-consuming task, I can assure you that once you are aware of all aspects that require optimization, and which Drupal modules are the most efficient at facilitating your work, you can complete this project in just a couple of hours.

To start, here is a list of all things that you should fix on your website:

- Give every page a different title

- Write a Meta description for each page (no more than 160 characters)

- Use different headings

- Add ALT attribute to all images (aka image description)

- WWW resolve (redirect the www. version of your website to the non-www. version, or vice versa)

- Include robots.txt

- Add XML sitemap

- Declare the correct language

- Integrate Google Analytics

- Decrease page load time (this is the hardest)
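Before installing anything, it can help to see where a page currently stands. The following is a minimal Python sketch, not part of Drupal or of any module mentioned in this article, that checks one page's HTML against several items from the list above: title, Meta description length, headings, image ALT attributes, and the declared language. The names `SeoAudit` and `audit` are made up for illustration, and the 160-character limit is simply the one given in the list.

```python
from html.parser import HTMLParser


class SeoAudit(HTMLParser):
    """Collects a few on-page SEO items from a single HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None      # None means no description tag was seen
        self.lang = None
        self.headings = []
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "html":
            self.lang = attrs.get("lang")
        elif tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""
        elif tag in ("h1", "h2", "h3", "h4", "h5", "h6"):
            self.headings.append(tag)
        elif tag == "img" and not attrs.get("alt"):
            # Assumption: an empty alt="" is counted as missing here.
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit(html):
    """Return a list of human-readable problems found in one page."""
    parser = SeoAudit()
    parser.feed(html)
    problems = []
    if not parser.title.strip():
        problems.append("missing title")
    if parser.description is None:
        problems.append("missing meta description")
    elif len(parser.description) > 160:
        problems.append("meta description longer than 160 characters")
    if not parser.headings:
        problems.append("no headings")
    if parser.images_missing_alt:
        problems.append(f"{parser.images_missing_alt} image(s) without alt text")
    if not parser.lang:
        problems.append("no lang attribute on <html>")
    return problems
```

Calling `audit(html)` on the saved source of a page returns a list of problems, and an empty list means the page passes these basic checks. The sketch does not compare titles across pages, and it does not cover robots.txt, the sitemap, analytics, or load time.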

Now that I have covered all the items that require your attention, here are the best modules to help you optimize your Drupal CMS:

1. The Page Title module. This allows you to easily change the titles of all the pages on your website. It is really simple, so with just a couple of clicks you will have different titles for all your pages.

2. The Meta description tag allows the search engine users to quickly see what your website is all about and decide whether they want to visit it or not. Therefore, it is very important to create relevant descriptions for all your pages. The best Drupal module for this is called Nodewords. You will have the ability to write unique Meta descriptions for all existing pages on your website and you will have additional fields when creating a new one.

3. To create different headings on your website, the only thing that you have to do is to create different nodes on every page. Furthermore, you need to separate each node with sub-titles – piece of cake.

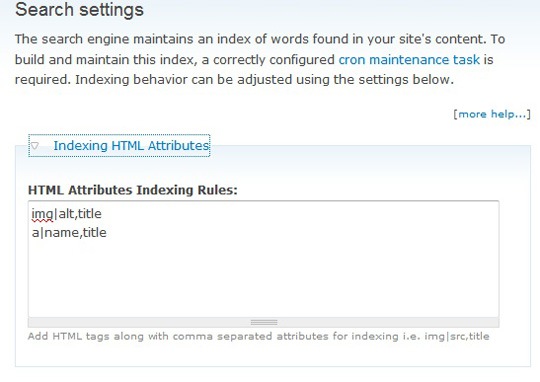

4. When you search Drupal’s website for image modules, you will find many choices. The module I strongly recommend is called Indexing HTML Attributes, which allows you to inspect the HTML code of your whole website and add attributes wherever you need them (in this case, the ALT and title attributes of images).

5. Many website owners have a problem they may not be aware of: if their website is not redirected properly, it creates site-wide duplicate content, which has negative consequences for search rankings. To fix that, you will have to redirect the www. version of your website to the non-www. version, or the opposite (it is up to you). To do that, you need to find the .htaccess file of your website and configure it. To make this easier, Drupal provides instructions on how to do it. Here is the code (remember to replace example.com with the URL of your website):

    # To redirect all users to access the site WITH the 'www.' prefix,
    # (http://example.com/... will be redirected to http://www.example.com/...)
    # adapt and uncomment the following:
    # RewriteCond %{HTTP_HOST} ^example\.com$ [NC]
    # RewriteRule ^(.*)$ http://www.example.com/$1 [L,R=301]
    #
    # To redirect all users to access the site WITHOUT the 'www.' prefix,
    # (http://www.example.com/... will be redirected to http://example.com/...)
    # adapt and uncomment the following:
    # RewriteCond %{HTTP_HOST} ^www\.example\.com$ [NC]
    # RewriteRule ^(.*)$ http://example.com/$1 [L,R=301]
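To make the effect of the second rule concrete, here is a small Python sketch that imitates what the non-www. redirect does to an incoming request. It only illustrates the logic and is not how Apache implements it; the function name `redirect_target` is hypothetical, and query strings are ignored for simplicity.

```python
import re

# Mirrors the RewriteCond pattern ^www\.example\.com$ with the [NC] flag
# (NC = case-insensitive match on the host name).
WWW_HOST = re.compile(r"^www\.example\.com$", re.IGNORECASE)


def redirect_target(host, path):
    """Return the 301 target for a request, or None if no redirect applies."""
    if WWW_HOST.match(host):
        # In .htaccess context the leading slash is stripped before ^(.*)$
        # is matched, so the substitution adds it back in front of $1.
        return "http://example.com/" + path.lstrip("/")
    return None
```

A request for `/node/1` on `www.example.com` would be sent to `http://example.com/node/1`, while a request that already uses `example.com` returns `None`, meaning it is served without a redirect.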

6. To create a robots.txt file, you will need the RobotsTxt module. It generates the file for you, serves it from the root of your site, and allows you to edit it dynamically from the Drupal administration pages. However, before making any changes, I strongly recommend learning more about the structure of this file, because you might accidentally block some search engine spiders from accessing your website.

7. For your sitemap, use the XML sitemap module. Once installed, the module will automatically create a sitemap for your website and it will allow you to submit it to the most popular search engines – Yahoo, Google, Bing and Ask.

8. If your website uses a language other than English, you have to notify the search engines. With pure HTML this is quite simple, but with Drupal you will need a special module called Internationalization, which extends the language capabilities of the Drupal core and lets you declare the language of your website.

9. Adding Google Analytics is really easy: you need the Google Analytics module. Configuring it can be tricky, especially if you want to collect more advanced data about your visitors and the actions they perform on your website. But getting started with the module is easy, as you will only need your Google Analytics account number.

10. Finally, it is time to decrease the load time of your website. This may be the hardest task on our list, but it is also one of the most valuable for SEO. The modules I recommend are Block Cache and Advanced Cache. They create cached versions of the blocks on your website and thus reduce loading time. In addition, it is very important to validate your website with the W3C Validator, so that you can be sure there are no mistakes in the markup. If you do find mistakes and do not have the expertise to fix them, I recommend seeking out someone who can, rather than attempting to fix them yourself and possibly making things worse.
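Regarding the robots.txt caution in step 6, one way to check a draft before publishing it is Python's standard `urllib.robotparser` module. The sketch below assumes you paste the file contents in as a string; the helper name `blocked_agents` and the default crawler names are my own choices for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_agents(robots_txt, url, agents=("Googlebot", "Bingbot")):
    """Return the crawler names from `agents` that may not fetch `url`."""
    parser = RobotFileParser()
    # parse() takes the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]
```

If the function returns a non-empty list for a page you want indexed, the draft rules are blocking those spiders and should be revisited before you save them.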

A tip: As you work through the above, use the SEO Checklist Module. It will not only allow you to keep track of what you have completed so far, but will also introduce you to some of the best Drupal SEO practices.

